In the rapidly evolving landscape of artificial intelligence, a new frontier of digital manipulation has emerged, bringing with it both technological marvel and profound ethical dilemmas. At the forefront of this contentious space is Clothoff.io, an application that has garnered significant attention for its startling ability to "undress anyone using AI." This controversial tool, which claims to offer users the power to digitally remove clothing from images, has sparked widespread debate, raising critical questions about privacy, consent, and the responsible use of advanced AI.

As AI technology becomes increasingly accessible, the line between innovation and exploitation blurs. Clothoff.io exemplifies this tension, drawing millions of monthly visitors eager to explore its capabilities. However, beneath the surface of technological novelty lies a complex web of ethical concerns, legal ambiguities, and a concerted effort by its creators to remain anonymous. This article delves into the intricacies of Clothoff.io, examining its operational model, the identities (or lack thereof) behind its creation, its impact on individuals and society, and the broader implications for the future of AI-generated content.

Table of Contents

- Understanding Clothoff.io: The AI "Undressing" Phenomenon

- The Controversial Technology Behind Clothoff.io

- Unmasking the Creators: The Elusive Identities Behind Clothoff.io

- The Ethical and Legal Minefield of Deepfake Apps

- User Experience and Community Engagement

- The Business Model and Monetization of Clothoff.io

- Navigating the Digital Landscape: Protecting Yourself

- The Future of AI-Generated Content and Deepfakes

Understanding Clothoff.io: The AI "Undressing" Phenomenon

Clothoff.io is an online platform that uses artificial intelligence to create what are commonly called "deepfake" images. Its primary function, as boldly advertised on its website, is to "undress anyone using AI": users upload an image, and the application's algorithms generate a version in which the subject appears to be nude. The exact technology has not been disclosed, but it is likely built on generative adversarial networks (GANs) or similar generative models trained on large image datasets to learn how to realistically alter human figures and clothing.

The scale of its reach is noteworthy: Clothoff.io's website reportedly receives more than 4 million visits a month. This traffic points to substantial public interest, or at least curiosity, in AI-powered manipulation tools. Although the app presents itself as a technological novelty, its core function immediately raises red flags, and The Guardian has described it as a "deepfake pornography app." That label reflects the non-consensual nature of many of the images generated and the severe ethical and legal consequences that follow. The ease of access, whether by scanning a QR code to download the app or by finding it in app stores, further amplifies concerns about its availability and potential for misuse.

The Controversial Technology Behind Clothoff.io

At its core, Clothoff.io relies on advanced AI algorithms, likely a form of generative AI, to perform its "undressing" function. These algorithms are trained on extensive datasets, which lets them learn patterns of human anatomy, clothing texture, and how light interacts with surfaces. When an image is uploaded, the AI analyzes the subject and generates a new version, fabricating what might lie underneath the clothing. The results can be realistic enough that an untrained eye may struggle to distinguish them from genuine photographs.

The very existence of apps like Clothoff.io underscores a critical divide in the AI development community. On one side are developers who prioritize ethical AI, building in strict safeguards to prevent the generation of harmful or illicit content. For instance, many responsible AI image generation platforms will "very strictly prevent the AI from generating an image if it likely contains" sensitive or inappropriate material, particularly anything resembling non-consensual sexual imagery. These platforms employ content filters, moderation systems, and user guidelines to ensure their technology is used responsibly.

Conversely, apps like Clothoff.io appear to either bypass these ethical considerations entirely or are designed specifically to circumvent them. Their functionality directly contradicts the principles of consent and privacy, turning powerful AI tools into instruments of digital harm. This deliberate design choice makes them highly controversial and raises urgent questions about accountability in the rapidly expanding field of AI innovation. The technology itself is neutral, but its application by Clothoff.io is anything but.

Unmasking the Creators: The Elusive Identities Behind Clothoff.io

One of the most striking aspects of Clothoff.io is the concerted effort by its creators to remain anonymous. Investigations into the app's financial transactions have "revealed the lengths the app’s creators have taken to disguise their identities." This anonymity is not merely a matter of personal preference; it serves as a shield against accountability for the potentially illegal and harmful content generated by the app. In the digital age, where lines of code can have real-world consequences, the ability of developers to operate in the shadows poses a significant challenge to law enforcement and ethical oversight.

The pursuit of anonymity by those behind such controversial applications is a common tactic. It complicates legal action, makes it difficult to enforce cease-and-desist orders, and allows the app to continue operating even as public outcry grows. This deliberate obfuscation of identity underscores the creators' awareness of the problematic nature of their product and their desire to evade the repercussions that would typically accompany the distribution of non-consensual deepfake content.

Texture Oasis: A London-Registered Firm

Despite the creators' efforts to conceal their identities, financial transactions reportedly "led to a company registered in London called Texture Oasis." This revelation provides a crucial, albeit limited, link to the real-world entities behind Clothoff.io. A registered company, even one acting as a shell or intermediary, leaves a legal footprint that can potentially be traced. However, such firms often sit within layers of corporate structure designed to obscure the ultimate beneficial owners.

The existence of Texture Oasis suggests a deliberate, organized effort to monetize the Clothoff.io platform. Registering a company in London, a financial center known for its robust financial and legal frameworks, might lend an air of legitimacy to the operation while still keeping a degree of separation from the day-to-day running of the controversial app. This registration provides a starting point for investigations into the app's funding, its management, and the individuals who ultimately profit from its use, even if the path remains complex and challenging to navigate.

The Ethical and Legal Minefield of Deepfake Apps

The ethical and legal implications of deepfake apps like Clothoff.io are vast and deeply concerning, touching upon fundamental rights such as privacy, consent, and reputation. The primary harm stems from the creation and dissemination of non-consensual deepfake pornography, which is a severe violation of an individual's dignity and autonomy. Victims often experience profound psychological distress, reputational damage, and social stigma. The ease with which such content can be created and shared means that anyone, from private citizens to public figures, can become a target.

Public figures face particular exposure. Celebrities already operate under intense scrutiny of their image and careers, and deepfake apps add another layer of vulnerability: fabricated images can spread quickly, affecting a celebrity's public perception, endorsements, and even their ability to appear on shows or sustain a career. Agencies must now contend not only with real controversies but also with digitally manufactured ones, which makes image management far more complex and critical.

Legally, many jurisdictions are struggling to keep pace with the rapid advancement of deepfake technology. While some countries have begun to enact laws specifically criminalizing the creation and distribution of non-consensual deepfakes, enforcement remains challenging because of the anonymity of many platforms and the global reach of the internet. Reporting by The Guardian on the "names linked to Clothoff, the deepfake pornography app" underscores the urgent need for robust legal frameworks and international cooperation to combat this emerging form of digital abuse.

Preventing Misuse: AI's Own Safeguards

In stark contrast to apps like Clothoff.io, many ethical AI developers and researchers are actively working on safeguards against misuse. These include robust content moderation systems, watermarking or digital fingerprinting of AI-generated content, and detection tools that identify deepfakes. According to one user's account, even the porn-generating AI websites operating today "will very strictly prevent the AI from generating an image if it likely contains" inappropriate or illegal material.

These safeguards often combine automated filters, human review, and strict terms of service that prohibit the creation of non-consensual or explicit content. The goal is to ensure that powerful AI tools are used for creative, productive, and ethical purposes rather than for harm. However, the existence of platforms like Clothoff.io shows that not all developers follow these guidelines, creating a continuous cat-and-mouse game between those who try to prevent misuse and those who exploit the technology for illicit ends. The challenge lies in developing detection methods as sophisticated as the generation methods, so that harmful deepfakes can be reliably identified and removed.
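Digital fingerprinting can be illustrated with perceptual hashing, one widely used family of techniques that lets a platform recognize re-uploads of an image it has already flagged, even after small edits such as a brightness change. The sketch below is a minimal "average hash" in Python, assuming the image has already been decoded and downscaled to an 8x8 grayscale grid; the function names and the match threshold of 10 bits are hypothetical illustrations, not the method of any particular platform. Real-world hash-matching systems typically use more robust perceptual hashes than this one.

```python
from typing import List

# An 8x8 grid of grayscale pixel values in the range 0-255 (assumed input format).
Grid = List[List[int]]


def average_hash(grid: Grid) -> int:
    """Return a 64-bit fingerprint: each bit is 1 if a pixel is brighter than the mean."""
    pixels = [p for row in grid for p in row]
    if len(pixels) != 64:
        raise ValueError("expected an 8x8 grid (downscale the image first)")
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        # Shift in one bit per pixel, first pixel ending up as the most significant bit.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count differing bits; a small distance suggests a near-duplicate image."""
    return bin(a ^ b).count("1")


def is_likely_match(a: int, b: int, threshold: int = 10) -> bool:
    # Hypothetical threshold; real systems tune this against false-positive rates.
    return hamming_distance(a, b) <= threshold


# Usage: a brightness-adjusted copy of an image keeps the same fingerprint.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brighter = [[min(255, p + 10) for p in row] for row in original]
print(hamming_distance(average_hash(original), average_hash(brighter)))  # 0
```

Because each bit only records whether a pixel is above or below the image's own average, uniform brightness or contrast changes tend to leave the fingerprint intact, while a genuinely different image flips many bits.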

User Experience and Community Engagement

The user experience of Clothoff.io, while ethically problematic, is designed for ease of access and use. The ability to "scan this QR code to download the app now or check it out in the app stores" highlights its accessibility, aiming for widespread adoption. Once downloaded, users are presumably guided through a simple interface to upload images and initiate the AI "undressing" process. The app's appeal, for its user base, lies in its perceived novelty and the controversial power it grants.

Beyond the direct app interface, communities form around such technologies, discussing features, sharing results, and exploring new applications. This digital word-of-mouth contributes significantly to an app's reach and longevity.

The Reddit and Telegram Connection

Online platforms like Reddit and Telegram play a significant role in spreading and discussing AI tools, including those with controversial uses. Reddit, which bills itself as offering "the best of the internet in one place," hosts a wide range of communities, and its constantly updating personalized feeds include discussions about AI apps. Dedicated communities for Clothoff.io appear small: the clothoff_bot subreddit had just one subscriber when the data was collected. This may indicate that direct interaction with the bot is less common than use of the website or app, or that discussion is spread across larger, less specific subreddits. Even so, the broader conversation around AI and deepfakes is extensive, and Reddit's design, built around inviting users to "add your thoughts and get the conversation going," can inadvertently spread information about apps like Clothoff.io.

Telegram is another critical platform. Reddit's telegrambots community, with about 37k subscribers, exists for users to "share your Telegram bots and discover bots other people have made." Spaces like this make it easy to share and discover bots, including ones that may offer similar deepfake functionality. The observation that "the bot profile doesn't show much" for certain bots hints at a lack of transparency, which is common among services operating in legal grey areas. Meanwhile, Reddit's characterai community, with about 1.2 million subscribers, shows the massive public interest in content-generating AI, creating fertile ground for both ethical and unethical applications to emerge and gain traction.

Alternative AI Image Generation Tools

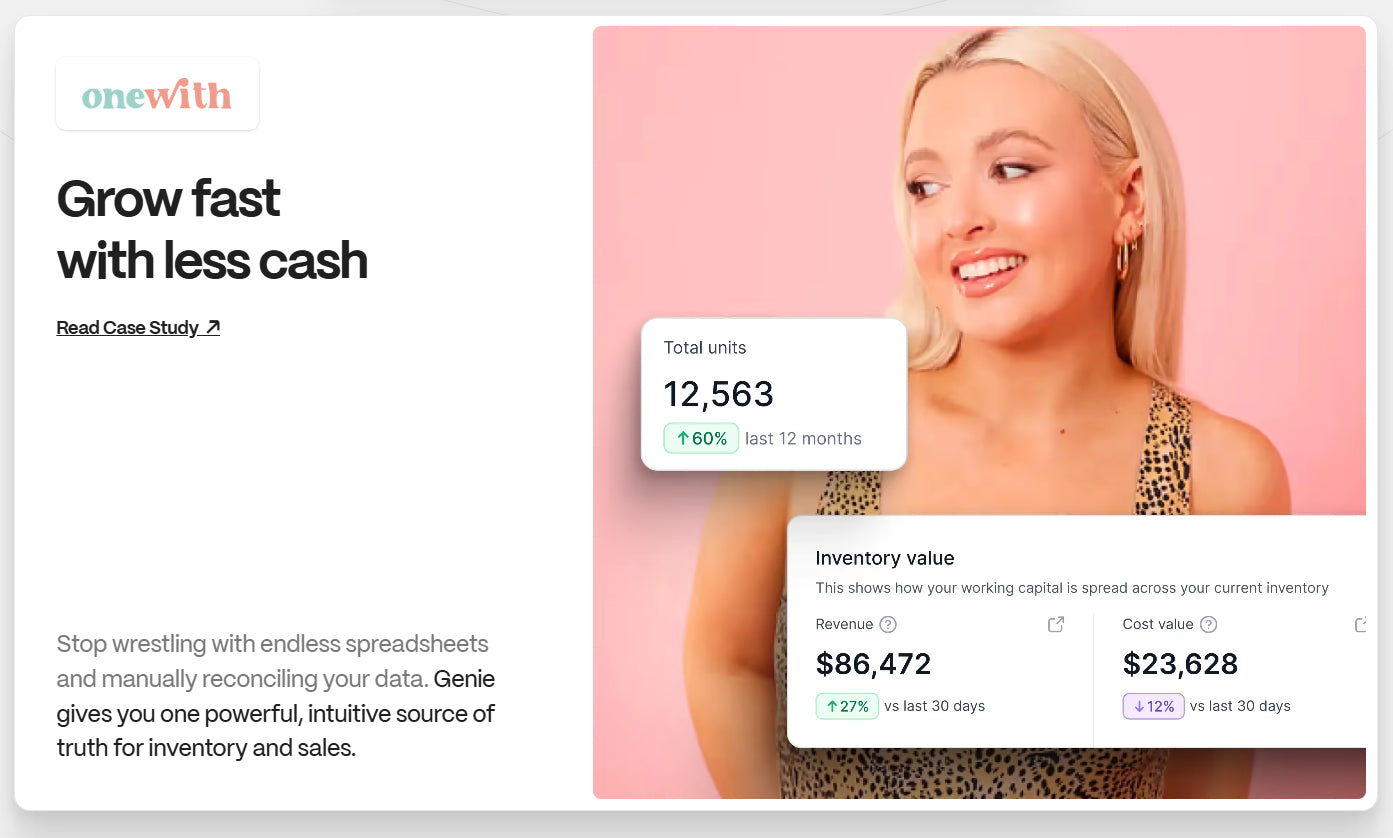

The landscape of AI image generation is vast and competitive. While Clothoff.io occupies a controversial niche, many other AI tools offer image generation for legitimate and creative purposes. One user recommendation, for example, describes Muah AI as "absolutely free" and praises its "unbeatable speed in photo generation." Alternatives like this emphasize accessibility and performance, often without the explicitly controversial functionality of Clothoff.io.

Claims of free access and fast generation reflect the rapid innovation and competition in the AI space. For users seeking AI image generation, such alternatives offer a different value proposition, focused on speed and cost rather than the explicit digital manipulation of existing images. This competition keeps developers constantly shipping new features and users constantly looking for the latest tools, regardless of their ethical implications.

The Business Model and Monetization of Clothoff.io

While the explicit business model of Clothoff.io is not fully detailed in public information, the phrase "payments to Clothoff" clearly indicates that the app operates on a monetization basis. This suggests a paid service, likely through subscriptions, one-time fees for image processing, or premium features that unlock higher quality results or faster processing times. Given the controversial nature of its service, a direct advertising model might be difficult to sustain with mainstream ad networks, pushing it towards direct user payments.

The existence of a company like Texture Oasis, a London-registered firm, further supports the notion of a structured business operation behind Clothoff.io. This firm likely handles the financial transactions, processing payments and potentially managing the infrastructure required to run an AI service that receives over 4 million monthly visits. The allure of such an app, despite its ethical baggage, indicates a demand that its creators are clearly capitalizing on. This commercial aspect underscores the motivation behind the app's development and the lengths taken to obscure identities, as significant profits could be at stake. The contrast with "absolutely free" alternatives like Muah AI also suggests a tiered market where users might pay for specific, controversial functionalities not offered by free, ethical tools.

Navigating the Digital Landscape: Protecting Yourself

In an era where AI-powered deepfakes are becoming increasingly sophisticated and accessible through apps like Clothoff.io, understanding how to protect oneself and others is paramount. The potential for harm, ranging from reputational damage to severe emotional distress, necessitates a proactive approach to digital literacy and online safety.

Firstly, developing a critical eye for digital content is essential. While deepfakes can be highly convincing, subtle inconsistencies in lighting, shadows, facial expressions, or even background details can sometimes reveal manipulation. Be skeptical of unexpected or sensational images, especially if they involve public figures or appear out of context.

Secondly, understanding and managing your digital footprint is crucial. Be mindful of the images you share online, and consider privacy settings on social media platforms. While it's impossible to completely control what happens to an image once it's public, reducing your exposure can mitigate risks.

Thirdly, for victims of deepfake abuse, immediate action is necessary. This includes reporting the content to the platform where it is hosted, seeking legal counsel, and documenting all instances of the deepfake. Organizations specializing in online harassment and digital rights can offer support and guidance. The legal landscape is evolving, and reporting these incidents helps build a case for stronger regulations and enforcement.

Finally, advocating for stronger regulations and ethical AI development is a collective responsibility. Supporting legislation that criminalizes non-consensual deepfakes and promotes transparency in AI-generated content is vital. Engaging in conversations about responsible AI and holding developers accountable for the tools they create can help shape a safer digital future, one in which harmful content is removed and digital spaces remain safe and consensual.

The Future of AI-Generated Content and Deepfakes

The emergence and proliferation of apps like Clothoff.io represent a critical juncture in the development of artificial intelligence. On one hand, AI offers unprecedented opportunities for creativity, efficiency, and problem-solving across countless industries. On the other, its misuse, particularly in the realm of deepfakes, poses profound societal challenges that demand immediate and sustained attention.

The future of AI-generated content is likely to be characterized by a continuous arms race between generation and detection technologies. As AI models become more sophisticated, creating increasingly realistic fakes, so too will the tools designed to identify them. This ongoing technological evolution will necessitate constant vigilance from individuals, platforms, and regulatory bodies.

Furthermore, the conversation around AI ethics will intensify. There will be increasing pressure on AI developers to build in safeguards from the outset, prioritizing ethical considerations over raw capability. The concept of "responsible AI" will move from a niche discussion to a mainstream imperative, influencing how AI is designed, deployed, and governed. This includes greater transparency regarding the data used for training AI models and the potential biases or harmful capabilities they might acquire.

Ultimately, the trajectory of AI-generated content, including deepfakes, will depend on a delicate balance of technological advancement, robust legal frameworks, and widespread public awareness. While tools like Clothoff.io may attract millions of visits, the long-term societal cost of unchecked digital manipulation is too high. The collective responsibility lies in ensuring that AI serves humanity's best interests, fostering innovation while rigorously protecting privacy, consent, and truth in the digital age. The choices that developers, platforms, lawmakers, and users make today will shape the future of AI.

Conclusion

Clothoff.io stands as a stark reminder of the dual nature of artificial intelligence: a powerful tool capable of both immense good and profound harm. Its ability to "undress anyone using AI" has thrust it into the center of a global debate on privacy, consent, and the ethical boundaries of technology. As we've explored, the app's creators have gone to great lengths to disguise their identities, operating through entities like Texture Oasis, highlighting the challenges in holding such platforms accountable.

The ethical and legal minefield created by deepfake apps
