In an era defined by rapid technological advancements, artificial intelligence continues to push boundaries, often venturing into territories that spark intense debate and ethical concerns. One such frontier is the emergence of "undress AI" tools – sophisticated applications designed to digitally remove clothing from images. While proponents might tout their creative potential or even privacy features, the underlying implications for personal privacy, consent, and societal well-being are profoundly unsettling and demand a thorough, critical examination.

This article delves deep into the phenomenon of undress AI, exploring its technological underpinnings, the ethical quagmire it presents, and the far-reaching consequences for individuals and digital society. We aim to shed light on the genuine risks associated with such tools, emphasizing the importance of digital literacy, robust legal frameworks, and a collective commitment to safeguarding personal integrity in the digital age. Understanding this technology is crucial, not to promote its use, but to arm ourselves with the knowledge needed to combat its potential misuse and advocate for a safer online environment.

Table of Contents

- Understanding Undress AI: What Is It?

- The Technology Behind the Transformation

- Claims vs. Reality: Speed, Privacy, and Quality

- The Alarming Ethical Landscape of Undress AI

- Non-Consensual Intimate Imagery (NCII): A Grave Threat

- Erosion of Trust and Digital Identity

- Legal Ramifications and Regulatory Challenges

- Existing Laws and Their Limitations

- The Global Fight Against Deepfake Abuse

- Societal Impact: Beyond the Individual

- Psychological Toll on Victims

- The Normalization of Digital Harassment

- Navigating the Future: Responsible AI and User Awareness

- Conclusion

Understanding Undress AI: What Is It?

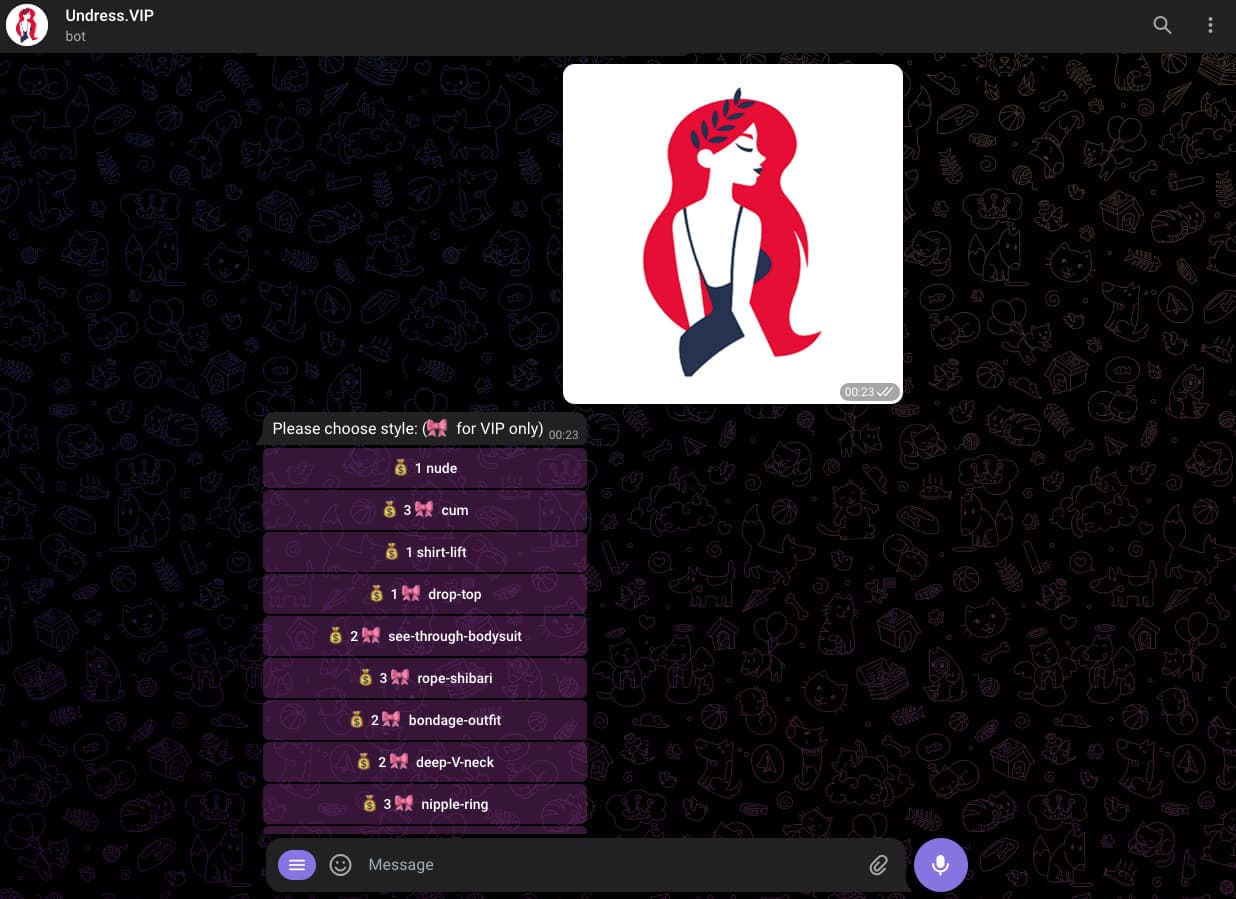

At its core, undress AI refers to a category of artificial intelligence applications and algorithms designed to manipulate images by digitally removing or altering clothing on a subject within a photograph or video. These tools use machine learning techniques, often deep neural networks, to generate synthetic imagery that appears to show individuals without clothes, even if they were fully clothed in the original input. The concept isn't entirely new; photo manipulation has existed for decades. However, AI has automated the process and made it accessible to a broad audience with just a few clicks.

The primary appeal, as marketed by some developers, lies in supposed simplicity and efficiency. Some tools boast "one-button AI clothing removal," emphasizing speed, quality, and even a misguided notion of "privacy" in their design. They claim to offer "unparalleled creative freedom" or "more control over your photos" by allowing users to "effortlessly eliminate unwanted clothing elements." While the technical capability to perform such transformations is undeniable, the ethical implications of this specific application of AI are profound and deeply concerning, especially when it is applied to images of real people without their explicit, informed consent. Image-generation technology may be neutral in the abstract, but its use as "undress AI" routinely veers into morally and legally perilous territory.

The Technology Behind the Transformation

Undress AI tools are typically built on generative adversarial networks (GANs) and other deep learning models. A GAN pairs two networks: the "generator" tries to create realistic images, and the "discriminator" tries to tell whether an image is real or fake. They learn by competing against each other. In the context of undress AI, the generator is trained on large datasets of images, often tens or even hundreds of thousands of real photos of both clothed and unclothed individuals. For example, some tools claim to be "trained on 60,000 real photos." This training allows the model to learn patterns, textures, and anatomical structures associated with the human body.

When a user uploads a clothed image, the AI analyzes it, identifies the clothing, and then attempts to "fill in" the areas where clothing was present with synthetic skin, hair, or other body features. The goal is a seamless and convincing illusion that the person was never clothed. The result is always a fabrication: the model does not reveal anything about the real person, it invents plausible-looking content. The output can range from crude, obviously fake images to disturbingly realistic ones, depending on the sophistication of the model and the quality of the input image. This technical capability does not answer the ethical questions surrounding its application.

Claims vs. Reality: Speed, Privacy, and Quality

Developers of undress AI tools often highlight features like "speed, privacy, and quality." Let's dissect these claims:

- **Speed:** It's true that AI can process images and generate results very quickly, often within seconds or minutes. This automation is a significant departure from manual photo editing, which would take a skilled artist hours. The same speed contributes to the ease of misuse, as creating and disseminating such images becomes almost instantaneous.

- **Privacy:** This is arguably the most misleading claim. While some tools might claim to process images locally or delete them after a short period, "undress AI" by its nature involves a massive invasion of privacy for the subject of the image. Even if the *tool* itself doesn't store the image, the *output* image, if created without consent, is a profound violation. Furthermore, the training data used for these models often consists of publicly available images whose subjects may never have consented to this type of generative manipulation. The "privacy" claimed by these apps typically refers to the user's data privacy *with the app*, not the privacy of the individual being depicted, which is the core ethical concern.

- **Quality:** The quality of generated images has improved dramatically. Early AI-generated content was often easily identifiable as fake due to distorted features, unnatural textures, or anatomical errors. With larger datasets and more advanced algorithms, however, the realism has become alarming. Results from popular tools can be highly convincing, making it difficult for an untrained eye to distinguish them from genuine photographs. This amplifies the potential for harm, as realistic deepfakes are more likely to be believed and to cause significant damage to a person's reputation and mental well-being.

The wide availability of explicit stock imagery and video online further blurs the lines, since it provides a ready source of content that could be manipulated or used to train such models.

The Alarming Ethical Landscape of Undress AI

The ethical implications of undress AI are vast and deeply troubling, extending far beyond simple photo manipulation. This technology directly infringes upon fundamental human rights, including privacy, autonomy, and dignity. It represents a significant step towards the normalization of digital sexual harassment and the creation of non-consensual intimate imagery (NCII), often referred to as "revenge porn" when shared maliciously. The ease with which these tools can be used to create highly realistic fake images of anyone, without their knowledge or consent, is a moral catastrophe in the making.

The primary ethical dilemma revolves around consent. When an undress AI tool is used on an image of a person without their explicit permission to be depicted in a nude or semi-nude state, it is an act of digital violation. This is not about artistic expression; it is about creating and potentially disseminating sexually explicit content that exploits an individual's image for voyeuristic or malicious purposes. The technology bypasses the need for physical interaction or even genuine access to intimate content, allowing for a pervasive and scalable form of digital abuse.

Non-Consensual Intimate Imagery (NCII): A Grave Threat

The most immediate and severe ethical concern with undress AI is its direct contribution to the proliferation of Non-Consensual Intimate Imagery (NCII). NCII, or "revenge porn," involves the distribution of sexually explicit images or videos of individuals without their consent. Traditionally, this involved sharing real photos or videos. However, undress AI tools introduce a new, insidious dimension: the creation and dissemination of *fake* NCII. This means that even if a person has never taken or shared an intimate photo of themselves, their image can be digitally altered to create one, which can then be used to harass, blackmail, or humiliate them.

The impact on victims of fake NCII is devastating. They face profound psychological distress, including anxiety, depression, paranoia, and even suicidal ideation. Their reputations can be irrevocably damaged, affecting their personal relationships, professional lives, and overall sense of safety and security. The digital nature of these images means they can spread rapidly across the internet, making removal incredibly difficult, if not impossible, and leaving victims feeling helpless and exposed indefinitely. The existence of such tools directly fuels this form of gender-based violence and digital abuse, making it a critical area of concern for cybersecurity experts, privacy advocates, and law enforcement agencies worldwide.

Erosion of Trust and Digital Identity

Beyond the direct harm to individuals, the widespread availability and use of undress AI contribute to a broader erosion of trust in digital media and in the concept of digital identity itself. In a world where online services advertise the ability to remove clothing from images "with ease," how can anyone be sure that what they see online is real? This uncertainty has far-reaching implications:

- **Disinformation and Misinformation:** The ability to create highly realistic fake images and videos (deepfakes) extends beyond explicit content. It can be used to fabricate political scandals, spread false narratives, or manipulate public opinion, making it harder to discern truth from fiction.

- **Reputational Risk for Public Figures:** Celebrities, politicians, and other public figures are particularly vulnerable because their images are widely available. Fake intimate imagery can be weaponized against them, causing immense personal and professional damage.

- **Paranoia and Self-Censorship:** Individuals might become more hesitant to share their photos online, fearing that their images could be digitally manipulated. This has a chilling effect on self-expression and participation in online communities.

- **Difficulty in Proving Authenticity:** For victims of deepfake NCII, proving that an image is fake can be incredibly challenging, especially to audiences unfamiliar with AI manipulation. This places an unfair burden on the victim to defend their integrity against fabricated evidence.

The foundation of digital trust, the belief that images and videos represent reality, is undermined by technologies like undress AI. This erosion of trust makes all online interactions more precarious and creates fertile ground for malicious actors to exploit.

Legal Ramifications and Regulatory Challenges

The rapid advancement of undress AI technology has created a significant challenge for legal systems worldwide. Laws often lag behind technological innovation, and deepfake technology, especially in the context of NCII, is a prime example. While many jurisdictions have laws against the distribution of NCII, adapting these laws to specifically address AI-generated fake content requires careful consideration. The legal landscape is complex, varying significantly from country to country, and enforcement remains a considerable hurdle.

The core legal issue is whether creating or sharing a synthetic image that depicts someone nude without their consent constitutes a crime, similar to sharing a real image. Many legal frameworks are designed around the concept of a "real" image or video. As AI-generated content becomes indistinguishable from reality, legal definitions need to evolve to encompass this new form of harm. Furthermore, identifying and prosecuting the creators and disseminators of such content is challenging due to the anonymous nature of the internet and the global reach of these tools.

Existing Laws and Their Limitations

Currently, legal responses to undress AI and deepfakes vary:

- **Specific Deepfake Laws:** A growing number of countries and U.S. states (e.g., California, Virginia, Texas) have enacted laws specifically targeting the creation or distribution of non-consensual deepfake pornography, through criminal penalties, civil remedies, or both. These laws often focus on intent to harm or harass.

- **Existing NCII Laws:** In jurisdictions without specific deepfake laws, prosecutors may attempt to apply existing non-consensual intimate imagery laws. However, this can be problematic if the legal definition of "intimate image" requires an authentic recording of the person.

- **Defamation and Privacy Torts:** Victims might pursue civil lawsuits for defamation, invasion of privacy, or infliction of emotional distress. However, civil cases can be costly, time-consuming, and difficult to win, especially if the perpetrator is anonymous or located in another country.

- **Copyright Infringement:** In some rare cases, if the original image used to create the deepfake is copyrighted, a copyright infringement claim might be possible, but this doesn't address the core harm to the individual's privacy and dignity.

The limitations of existing laws highlight the urgent need for comprehensive, harmonized legislation that explicitly addresses the creation, distribution, and possession of non-consensual synthetic intimate imagery. Such laws must also consider the role of platforms that host or facilitate the spread of such content.

The Global Fight Against Deepfake Abuse

The fight against deepfake abuse, including that facilitated by undress AI, is a global effort requiring collaboration among governments, tech companies, and civil society organizations. Key aspects of this fight include:

- **International Cooperation:** Since deepfakes can cross borders instantly, international agreements and coordinated legal responses are crucial to effectively prosecute perpetrators and protect victims.

- **Platform Responsibility:** Social media platforms, image hosting sites, and app stores have a critical role to play. They must implement robust content moderation policies, quickly remove NCII (both real and synthetic), and cooperate with law enforcement. Some platforms are already using AI to detect and flag deepfakes.

- **Technological Solutions:** Developing counter-deepfake technologies, such as detection tools that can identify AI-generated content, is essential. Watermarking or digital provenance systems could also help verify the authenticity of media.

- **Public Awareness and Education:** Educating the public about deepfakes, their dangers, and how to identify them is vital. Awareness campaigns should go beyond definitions and highlight the concrete dangers of digital manipulation.

- **Victim Support:** Providing resources and support for victims of deepfake abuse, including legal aid, psychological counseling, and assistance with content removal, is paramount. Organizations like the Cyber Civil Rights Initiative are crucial in this regard.

The battle against deepfake abuse is ongoing, but a multi-faceted approach involving legal, technological, and educational strategies offers the best hope for mitigating the harm caused by tools like undress AI.

Societal Impact: Beyond the Individual

The ripple effects of undress AI extend beyond individual victims, permeating the fabric of society and challenging our collective understanding of truth, consent, and online behavior. The widespread availability of tools that can "seamlessly remove clothing from images" contributes to a cultural environment where digital manipulation becomes normalized, and the boundaries of acceptable online conduct blur. This has profound implications for how we interact, trust information, and protect vulnerable populations.

The existence of such technology also raises questions about gender equality and the continued objectification of women. Historically, women have been disproportionately targeted by NCII, and deepfake technology exacerbates this issue, providing a new, powerful weapon for misogynistic abuse. It perpetuates harmful stereotypes and contributes to a hostile online environment, particularly for women and girls. The societal impact is not just about individual harm, but about the degradation of digital spaces and the erosion of respect for personal autonomy.

Psychological Toll on Victims

While already mentioned in the context of NCII, it's crucial to underscore the profound and lasting psychological toll on victims of undress AI-generated content. The experience is often described as a form of digital rape or violation, even though no physical contact occurred. Victims report:

- **Severe Emotional Distress:** Intense feelings of shame, humiliation, anger, betrayal, and powerlessness.

- **Anxiety and Depression:** Chronic anxiety, panic attacks, clinical depression, and in severe cases, post-traumatic stress disorder (PTSD).

- **Social Isolation:** Fear of public judgment, leading to withdrawal from social activities, friends, and family.

- **Damage to Self-Esteem and Body Image:** A profound sense of violation that can lead to long-term issues with self-worth and how they view their own body.

- **Suicidal Ideation:** In extreme cases, the overwhelming distress and feeling of hopelessness can lead to thoughts of self-harm or suicide.

The trauma is compounded by the persistent nature of digital content. Even if images are removed from one platform, they can resurface elsewhere, leading to a constant state of vigilance and fear. The psychological scars can last for years, requiring extensive therapy and support.

The Normalization of Digital Harassment

The ease of access to undress AI tools and the perceived anonymity of the internet risk normalizing digital harassment. When such powerful tools are readily available, the barrier to malicious behavior drops. What might start as "curiosity" or "experimentation" with an "AI clothes remover" can quickly escalate into a pattern of creating and sharing harmful content. This normalization can lead to:

- **Increased Incidence of Abuse:** As the technology becomes more sophisticated and accessible, the number of individuals targeted by fake NCII is likely to increase.

- **Desensitization:** Regular exposure to manipulated content can desensitize individuals to the severity of the harm it causes, making them less empathetic to victims.

- **"Gamification" of Abuse:** Some online communities might treat the creation of deepfakes as a game or challenge, further trivializing the real-world harm.

- **Challenges for Parents and Educators:** Protecting children and young adults from this form of abuse becomes increasingly difficult, requiring new strategies for digital literacy and online safety education.

Combating this normalization requires a concerted effort to educate the public, enforce strong legal penalties, and foster a culture of digital respect and empathy. A tool may be marketed for "creative freedom," but using it to "undress" images of real people is a form of digital harassment that must be condemned and actively fought against.

Navigating the Future: Responsible AI and User Awareness

The existence of undress AI is a stark reminder that technological progress must be accompanied by robust ethical considerations and responsible development. The future of AI hinges not just on what it *can* do, but on what it *should* do. Navigating this landscape requires a multi-pronged approach, focusing on both the creators of AI and the users of digital technology.

For developers, responsible AI means prioritizing ethical design from the outset. This includes implementing safeguards against misuse, refusing to develop applications that inherently facilitate harm, and considering the societal impact of their creations. It also means being transparent about the data used for training and the limitations of their models. For instance, if a model is trained on "60,000 real photos," developers must ensure consent was obtained for such usage, or at least acknowledge the ethical implications if it wasn't.

For users, awareness and critical thinking are paramount. We must cultivate a healthy skepticism towards digital content, especially images and videos that seem too good (or too bad) to be true. Understanding how undress AI and deepfake technology work empowers individuals to identify manipulated content and avoid becoming unwitting participants in its spread. This includes knowing that while photo-editing apps exist for legitimate purposes, "undress" features offered by some of them can be exploited for malicious ends.

Ultimately, the future requires a balance: fostering innovation while rigorously protecting fundamental human rights. This means advocating for stronger laws, supporting ethical AI research, and empowering individuals with the knowledge to navigate an increasingly complex digital world.

Conclusion

The emergence of "undress AI" tools represents a significant and troubling development in the landscape of artificial intelligence. While the technology itself showcases impressive capabilities in image manipulation, its application in digitally removing clothing from individuals without consent poses grave threats to privacy, dignity, and societal trust. We've explored how these tools operate, the misleading claims often made about their "privacy" and "quality," and most importantly, the devastating ethical and legal ramifications they unleash.

From contributing to the proliferation of non-consensual intimate imagery (NCII) to eroding our collective trust in digital media, the impact of undress AI is far-reaching. It inflicts profound psychological trauma on victims and normalizes a dangerous form of digital harassment. Legal systems worldwide are grappling with how to adequately address this new form of abuse, highlighting the urgent need for comprehensive legislation and international cooperation.

As we move forward, it is imperative that we prioritize ethical AI development, advocate for stronger protective laws, and foster greater digital literacy among the general public. The power of AI must be harnessed responsibly, ensuring that technological advancement serves humanity rather than enabling its exploitation.

What are your thoughts on the ethical implications of undress AI? How do you think society should best respond to such technologies? Share your perspectives in the comments below, and consider sharing this article to raise awareness about this critical issue. For more insights into digital safety and AI ethics, explore other related articles on our site.

Detail Author:

- Name : Jennie McGlynn
